re: pre-processing

All this talk of pre-processing, etc. Is this supposed to happen on the DLS and change the original files to "fix" them? And then everyone will download a clean copy?

Isn't that what the DLS cleanup is all about? Yeah, it's a slow manual process, but even if it could be automated, I think you'd still want someone to go in one at a time and validate each asset to make sure nothing was broken. And since other people own the assets, there is still the permission thing to take care of.

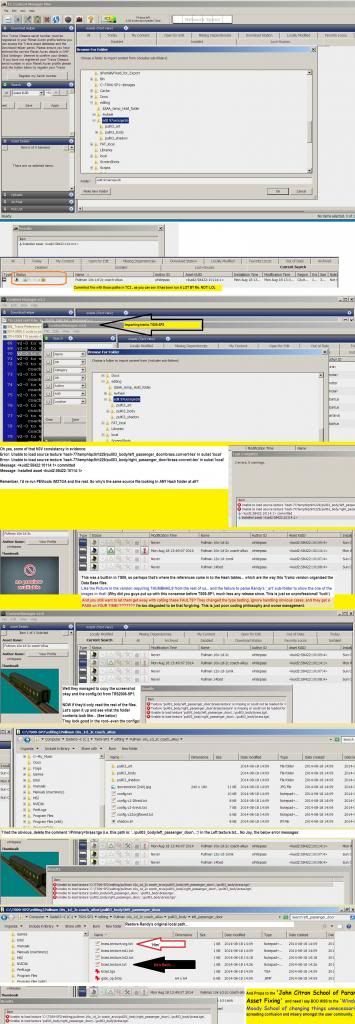

No, it happens locally, when the files are input by CDP import or whatever. THAT step will catch all the extant so-called errors that are curable by automated pre-processing. Why that pre-processing was removed in the first place, then sold as Improved Fault Checking with no one calling them on it in 2009, is the mystery to me.

Basically,

Test for the existence of a config.org.txt. If it's present, process config.txt normally, but with a smarter bit of code that can find things like textures in different folders, à la the TRAINS-to-TC3 input stages. If it's missing, copy the config as config.org.txt, then process it into a translated version: the new config.txt base file for the asset, auto-cleaned and in the data forms they know how to reconfigure (Bogey --> bogies { '0', ..., etc.).
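To make the two-branch idea concrete, here's a rough sketch in Python. It only illustrates the flow described above; the file names come from the post, but `translate_legacy`, `preprocess_asset` and the exact shape of the `bogies` output are hypothetical, and the bogey-to-bogies rewrite is just one assumed example of a legacy-tag translation, not the actual CM code.

```python
import shutil
import tempfile
from pathlib import Path

ORIGINAL_NAME = "config.org.txt"  # untouched backup of the author's config
WORKING_NAME = "config.txt"       # translated file the game actually loads


def translate_legacy(text: str) -> str:
    """Hypothetical translation pass: rewrite a legacy one-line 'bogey' tag
    into a 'bogies' container with a single entry '0'. Other lines pass
    through unchanged."""
    out = []
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[0].lower() == "bogey":
            out += ["bogies", "{", "  0", "  {",
                    f"    reference {parts[1].strip()}", "  }", "}"]
        else:
            out.append(line)
    return "\n".join(out) + "\n"


def preprocess_asset(asset_dir: Path) -> Path:
    """Return the config.txt to load, translating it once on first input."""
    original = asset_dir / ORIGINAL_NAME
    working = asset_dir / WORKING_NAME
    if original.exists():
        # Already pre-processed on an earlier input: load config.txt as is
        # (the smarter texture search would hook in here).
        return working
    # First input: keep the author's file, then write the cleaned version.
    shutil.copyfile(working, original)
    working.write_text(translate_legacy(original.read_text()))
    return working


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        asset = Path(d)
        (asset / WORKING_NAME).write_text("kind traincar\nbogey <kuid:1:2>\n")
        print(preprocess_asset(asset).read_text())
```

The point of keeping config.org.txt is that the translation is a one-time, reversible step: the author's original is never lost, and a second input skips straight to normal processing.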

Either way, don't burden the public with unnecessary error messages and warnings about things that would have been handled but for the code changes in the input process. The warnings they do give should only appear when running CM in a developer mode. Justin of J-R raised issues about exactly that, since v3.8 was flagging some new things as warnings in newly purchased payware.
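A minimal sketch of that reporting rule, assuming each issue carries a severity and a flag saying whether pre-processing already cured it (the `Issue`, `Severity` and `issues_to_show` names are made up for illustration): the public only sees errors that are still broken, while developer mode sees everything.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    WARNING = 1
    ERROR = 2


@dataclass
class Issue:
    severity: Severity
    message: str
    auto_fixed: bool = False  # hypothetical: True if pre-processing cured it


def issues_to_show(issues, developer_mode: bool):
    """Public users see only uncured errors; developer mode sees all issues."""
    if developer_mode:
        return list(issues)
    return [i for i in issues
            if i.severity is Severity.ERROR and not i.auto_fixed]
```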

They seem to have once had the idea that changed data forms would make the legacy content just go away, and they stubbornly kept pushing it as increased error testing. Not so, Joe: wrong approach, pure and simple. THIS COMMUNITY has paid the price for that CON job by fixing assets with curable issues.

The real benefit is that you take as your output a validated binary loadable, in effect an object file. Instead of reparsing the lines in the chump, you bring in a block of binary data that fits inside your control structure for that KIND of asset in one binary read operation. That header and KIND data then tell you how to input the associated strings or array data. Passenger stations, for example, have variable numbers of people, so the binary header, like the container, holds that count plus an offset to the start of the table, and you declare the array(s) to fit.
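Here's a rough sketch of that layout using Python's `struct` module; in C/C++ the header would be a plain struct. The field set (a KIND id, an entry count, and an offset to the table) is a hypothetical stand-in for a passenger station, not the real chump format. Because the header sits at offset 0, no table can ever start there, so an offset of 0 can serve as the "null" meaning "no table / doesn't apply to this KIND".

```python
import struct

# Hypothetical fixed header: KIND id, entry count, offset to the table.
HEADER = struct.Struct("<III")
ENTRY = struct.Struct("<I")  # one uint32 per table entry (hypothetical)


def save(kind_id, entries):
    """Pack the header, then the variable-length table right after it."""
    offset = HEADER.size if entries else 0  # 0 = null: no table present
    blob = HEADER.pack(kind_id, len(entries), offset)
    for e in entries:
        blob += ENTRY.pack(e)
    return blob


def load(blob):
    """Decode a blob that was pulled in with a single read."""
    kind_id, count, offset = HEADER.unpack_from(blob, 0)
    if offset == 0:
        return kind_id, []  # null offset: element absent for this KIND
    table = [ENTRY.unpack_from(blob, offset + i * ENTRY.size)[0]
             for i in range(count)]
    return kind_id, table


def load_file(path):
    with open(path, "rb") as f:
        return load(f.read())  # one read brings in header and table together
```

The header tells the loader exactly how big to make the array before touching the table, which is the whole advantage over reparsing text line by line.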

Where a value in the data block is a pointer to such an unpredictable element, a null value (as it was saved to disk) indicates either that you aren't done or that the data element doesn't apply to that KIND. C/C++ handles this kind of manipulation easily: the struct points to the variable-length element because its size can't be predicted, and that is likely how they handle it already. It's simply a necessary evil with variable-length data elements.

I first pointed this out last JULY in a thread on the elimination of remarks in config files... Windwalkr has been thinking about it, and had been before. The added data storage per asset in the chump likely has zero effect for small assets, and is only a 4K bump for larger non-route assets, if they are compressing text as they should be. The 4K figure is the minimum disk space taken by a one-byte file since HDDs broke the 2 GB threshold... around Y2K!

// Frank
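The 4K arithmetic, sketched under the post's assumption that disk space is handed out in whole 4096-byte clusters: any file's footprint is its size rounded up to the next cluster, so a small binary loadable costs at most one extra cluster.

```python
CLUSTER = 4096  # allocation unit assumed from the post (4K)


def on_disk_size(nbytes: int, cluster: int = CLUSTER) -> int:
    """Round a file size up to whole clusters (ceiling division)."""
    if nbytes == 0:
        return 0
    return -(-nbytes // cluster) * cluster


print(on_disk_size(1))     # a one-byte file still occupies a full cluster
print(on_disk_size(4097))  # one byte over spills into a second cluster
```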